Introduction

In this blog post, I'm going to explore DALL-E 2, an AI tool that creates images from text prompts, and see whether it could be useful in an educational setting. I use ChatGPT every day to help debug my code, but I've never tried DALL-E before, so this is going to be a new experience for me. I'll also use ChatGPT to guide my analysis and apply the SAMR model to figure out whether DALL-E 2 can actually enhance learning. Along with that, I'll touch on some ethical concerns, like how AI might affect classrooms and what we should think about before bringing these tools into education.

Using DALL-E 2

I wanted to test DALL-E 2's creativity, so I gave it the prompt 'Sheep playing baseball on a rainy day with friends.' The AI-generated image captured the whimsical scene quite well. Then I tried another prompt: 'Dog winning Olympic gold in 100m sprint.' The result was equally amusing, showing a dog standing on the podium with a gold medal. Images like these could be used in a creative writing or art class to inspire students to develop stories based on visual prompts. For students who aren't comfortable with drawing or painting, DALL-E 2 offers a more approachable way to express their creativity and engage with visual content.

Fig. 1 DALL-E 2 generated this image based on the prompt ‘Sheep playing baseball on a rainy day with friends.’

Fig. 2 DALL-E 2 generated this image based on the prompt 'Dog winning Olympic gold in 100m sprint.'

SAMR Model Analysis (with ChatGPT’s Help)

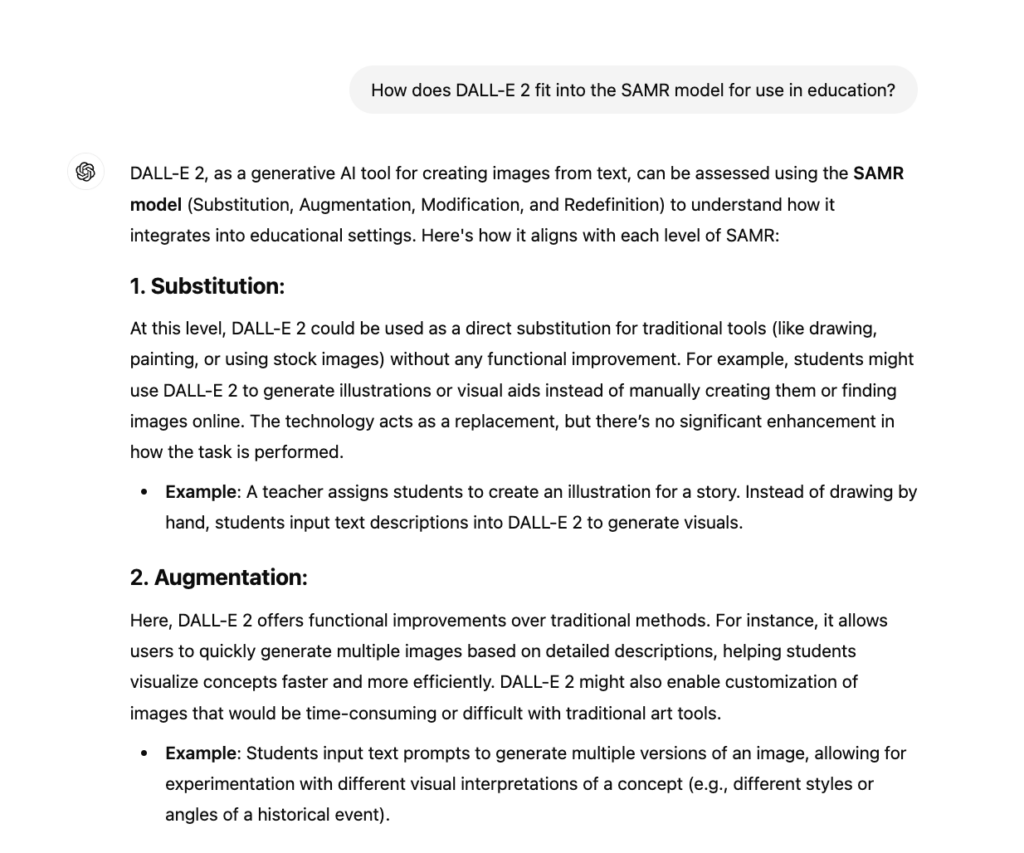

To help me better understand how DALL-E 2 fits into the SAMR model, I asked ChatGPT for a breakdown. ChatGPT explained how the tool could be used at each stage, from replacing traditional art supplies to redefining how students can engage with creative content in the classroom. Here’s the breakdown it provided:

Fig. 3 ChatGPT helped outline DALL-E 2’s fit within the SAMR model, guiding my analysis of its use in education.

After going through ChatGPT's breakdown, here are my own thoughts on how DALL-E 2 fits into education:

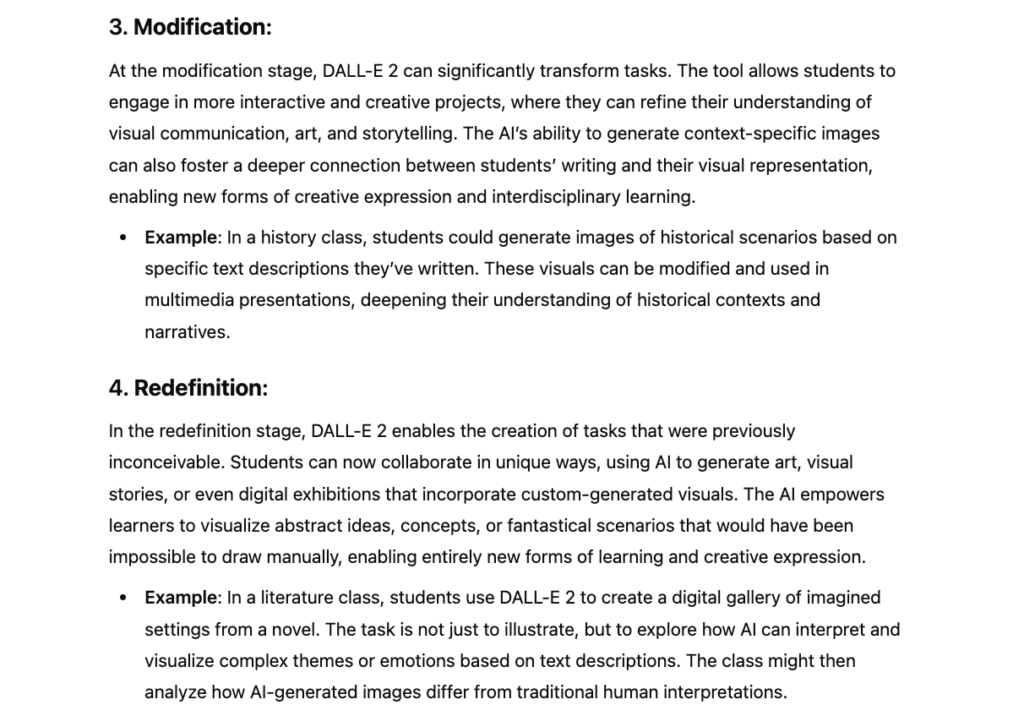

Ethical Considerations

I asked ChatGPT for a concise summary because its first answers included a lot of unnecessary information. I just wanted the key points, so I added the word "concise" to the end of my prompt, and it worked! Here's what ChatGPT came back with, breaking down the major issues like bias, copyright, and privacy in a clear, straightforward way. It was pretty helpful, especially since I was trying to keep things simple.

Fig. 4 ChatGPT’s response explaining the ethical concerns of using DALL-E 2 in education.

Reflection

Using ChatGPT and DALL-E 2 for this assignment was actually pretty fun and eye-opening. I didn't expect DALL-E 2 to do such a good job with random prompts like "sheep playing baseball on a rainy day," and it was super cool to see how it handled that. Because it generates images so quickly, I can see it working well in classrooms, especially in art or creative writing, where it really speeds things up when you're trying to come up with ideas.

One thing I noticed is that while DALL-E 2 makes things easier, there's a risk of students relying on it too much and missing out on learning how to do things themselves. It's great for visuals, but what about developing actual drawing skills? The ethical issues are also pretty real. I hadn't really thought about how the AI might draw on copyrighted images or how bias in its training data could sneak into the images it creates. So yeah, while it's an awesome tool, we have to be careful with how it's used.

Conclusion

So, overall, I think DALL-E 2 is a super useful tool for learning, especially when it comes to making visual content. It's really accessible for students who might not have the skills to draw or paint but still want to create something cool. It fits into the SAMR model pretty well, especially at the higher levels, where it can redefine learning by letting students collaborate on or create things they wouldn't have been able to make by hand.

Looking ahead, it’s clear that tools like DALL-E 2 could play a big role in making education more interactive and creative.

Citations

“How does DALL-E 2 fit into the SAMR model for use in education?” prompt, ChatGPT, OpenAI, 12 Oct. 2024, chat.openai.com/.

“What ethical concerns are there when using AI tools like DALL-E 2 in education concise?” prompt, ChatGPT, OpenAI, 12 Oct. 2024, chat.openai.com/.

“Sheep playing baseball on a rainy day with friends” prompt, DALL-E, version 2, OpenAI, 12 Oct. 2024, labs.openai.com/.

“Dog winning Olympic gold in 100m sprint” prompt, DALL-E, version 2, OpenAI, 12 Oct. 2024, labs.openai.com/.

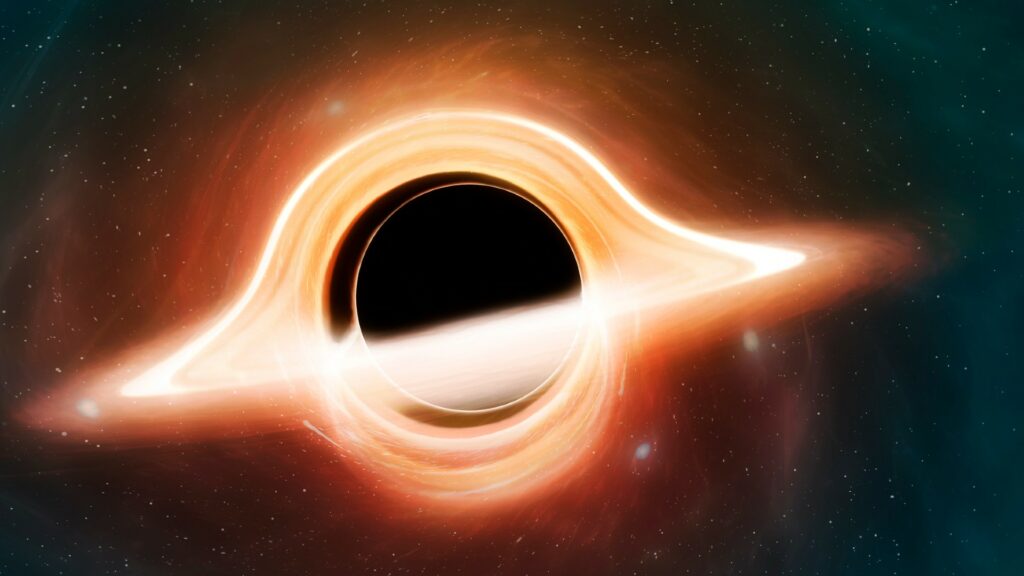

Hey everyone! For this first blog post, I created a video about the life cycle of stars and how black holes form and what they look like. I used Screencastify to record my screen and CapCut to edit the video, adding animations, images, and scientific concepts to explain how black holes form. Most of the animations came from sources such as Stargaze, Science Channel, Discovery, and National Geographic.

I imagined my audience as the EDCI 337 class: students from different programs, most of whom might not be very familiar with black holes or the life cycle of stars but are super curious about them.

As I applied Mayer's principles, I realized how important it is to think about how people are going to absorb the information. I found the signaling and coherence principles pretty easy to implement, but redundancy was harder. I kept wanting to add extra on-screen text to explain things better, but I had to stop myself.

Using Screencastify and the video editor was a tough task, but I feel like I now have a better handle on how to design multimedia that actually helps people learn.

© 2026 EDCI 337 – Raghav Chadha
